Introduction: When Intelligence Adapts to Geography

Artificial intelligence has become an active participant in the global flow of information. As large language models (LLMs) are deployed around the world, the sociocultural, legal, and infrastructural contexts in which they operate increasingly shape their behaviour. Even though these systems are trained on vast, globally sourced datasets, they do not always speak with the same voice. The same model, accessed from different parts of the world, can give responses that diverge in tone, in framing, and even in factual content. This phenomenon is not a failure of generalization; it reflects the diversity of the Internet's linguistic and regulatory environments.

At the core of this variation is a set of regionalized content filters. These filters are subtle, adaptive, and largely invisible to the user. They emerge from the alignment processes that govern model behaviour, processes designed to ensure compliance with local laws, minimize harm, and reflect cultural norms. Alignment, in this sense, is a form of governance: a mechanism through which policy and value judgments are translated into probabilities over language. Over time, this has produced an emergent geography of meaning in generative AI, in which the same model expresses itself differently across the globe, echoing the worldviews and sensitivities of each region.

Understanding this geography is essential for assessing the transparency and equity of AI systems worldwide. The implications of region-specific outputs extend beyond technical variation to concerns about representation, fairness, and epistemic balance. A model adapted to local context may increase accessibility and trust, but it can also reinforce boundaries in how information is framed or suppress expressions that run counter to local expectations. The challenge, then, is to measure these regional adaptations systematically and to interpret their linguistic patterns without treating any one region's output as the norm.

This study presents a structured method for measuring regional variation in AI output. Using a network of geographically distributed proxies, identical prompts were issued to a single language model under controlled conditions. Each regional response was examined along several linguistic dimensions: lexical (word choice and phrasing), semantic (conceptual meaning and framing), and composite divergence (combining lexical and semantic views). Together, these layers provide a composite picture of how model behaviour changes when communication is filtered through regional conditions.

The goal is to characterize empirically the role of geography in the expression of generative AI. By visualizing differences in vocabulary, meaning, and style, this research aims to chart the contours of regional alignment: the particular influences that shape how intelligence is expressed in different parts of the world. Rather than examining direct censorship or restrictions on content selection, the focus is on identifying how alignment itself becomes a medium for encoding regional perspectives. In this way, the study explores an emerging linguistic structure of the Internet, in which borders are drawn not by access and connectivity but by the differentiated voices of artificial intelligence.

1. Understanding Why Regional AI Filters Emerge from Culture and Policy

As artificial intelligence becomes a necessary layer of the modern information ecosystem, it inherits the political and cultural heterogeneity of the societies that use it. Large language models are not merely the products of powerful machines performing enormous numbers of calculations; they also reflect the environments in which they are trained, aligned, and deployed. Every such model is mediated by implicit governance: what information was included and what was excluded, what values informed reinforcement learning, and what social guidelines shaped the boundaries of appropriate output.

When these systems are deployed worldwide, they encounter diverse regional contexts. The same query issued in Singapore, Brazil, or Germany may elicit different responses, not because the underlying model has been altered, but because the mediating layer, the invisible interface between policy, culture, and machine cognition, responds differently to local demands. This variation is the basis of what may be called regional AI filtering: a structural phenomenon in which the same intelligence behaves differently under different normative pressures.

1.1 From Global Models to Local Alignments

Language models are often characterized as global systems capable of generalizing across domains and cultures. In deployment, however, they undergo an inevitable process of localization. Each region has its own ethical standards, speech laws, and sensitivities to social issues. When models are fine-tuned or moderated to operate under these conditions, they undergo regional alignment, recalibrating their output probabilities to match the rules of the environment in which they operate.

This adaptation is not usually manual. It arises through:

- Data selection and filtering: training corpora are filtered in order to respect local restrictions (e.g., copyright, hate speech).

- Reinforcement learning with human feedback (RLHF): annotators' judgments encode cultural norms of politeness, hierarchy, or political neutrality.

- Model deployment policies: regional endpoints often incorporate safety layers that dynamically adjust tone or response framing.

Through these mechanisms, the same model architecture can be used to project multiple linguistic personalities around the world.

1.2 Policy as an Invisible Design Layer

Regulatory structures act as invisible design constraints. A model must meet privacy requirements, disinformation laws, and ethical guidelines that vary drastically from one jurisdiction to another. These differences affect not only what information is presented but also how it is presented.

- In some jurisdictions, risk aversion shapes model behaviour: safety takes priority, uncertain claims are avoided, and responses default to neutral framing.

- Elsewhere, expressive latitude is valued; systems are given more room for argumentation and ambiguity.

- National compliance filters may automatically screen topics, subtly altering how sensitive debates (e.g., elections, health, or religion) are presented.

Because alignment internalizes these constraints, legal frameworks are expressed as statistical preferences rather than hard rules. The result is an invisible geography of compliance mapped onto the language space itself.

1.3 Culture as Linguistic Gravity

Beyond law and policy lies culture, which shapes how information should sound. Each linguistic community has norms governing:

- Directness and diplomacy in communication.

- Individual vs. collective orientation in the structure of arguments.

- Moral tone in the description of social or ethical issues.

When regional feedback is used to tune the model's behaviour, these tendencies imprint themselves on the generated text. For example, the same request for important career advice might be answered in one region with a bold tone and assertions of principle, and in another with a more modest acknowledgement of the role of community support. These are not mistakes but expressions of conversational gravity: a cultural pull that leads the model to speak in harmony with its listeners.

1.4 The Emergence of Regional Filtering

The interaction of governance and culture produces something measurable: regional filtering. It is neither censorship nor customization in the traditional sense, but a distributed alignment mechanism operating on three observable layers:

- Lexical: differences in word choice or word frequency that reflect the vocabulary preferred in a region.

- Semantic: changes in conceptual framing and in the associations between ideas.

- Structural: variation in length, stylistic composition, and syntax.

Each layer can be analyzed quantitatively to determine how regional influences manifest linguistically. Together, they form a multidimensional profile of adaptation, showing how a global model is expressed in local particulars. Understanding these dimensions lays the groundwork for the empirical framework in the next section, where these linguistic variations are observed and measured through controlled cross-regional prompts.

2. Building the Regional AI Matrix as a Controlled Global Experiment

This study needed a laboratory, so to speak, for observing how the same language model responds to identical prompts routed through different locations in the world. The system had to isolate regional variation while keeping computational and network conditions the same in each place.

The resulting Regional AI Matrix functions as a globally controlled experiment that balances ethical data collection, network transparency, and technical rigor.

2.1 Regional Setup and Prompt Design

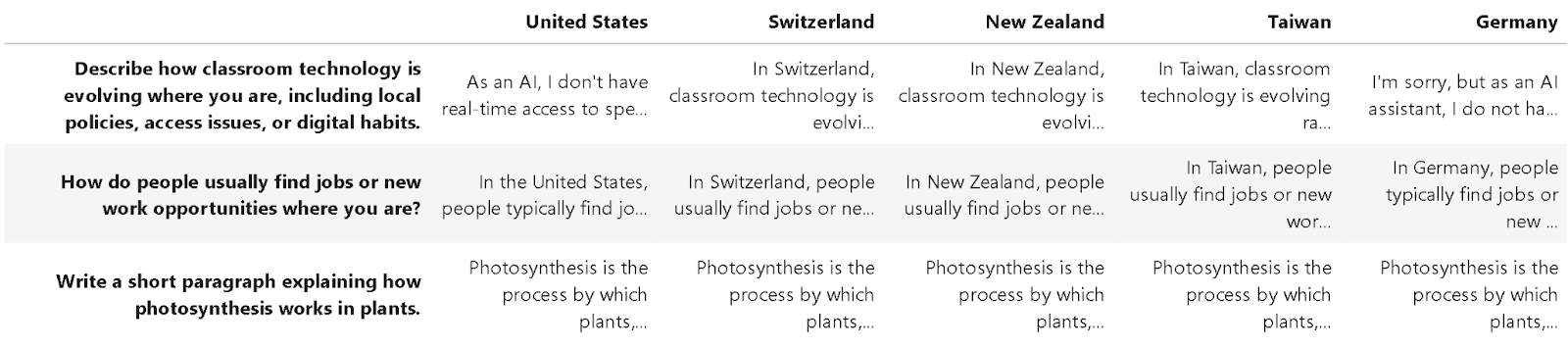

The experimental design began with a small set of prompts able to elicit globally relevant (though potentially regionally diverse) responses.

Three prompts were designed to cover distinct domains of public discourse (education, the economy, and science), each chosen for being neutral yet open to interpretation.

<table class="GeneratedTable">

<thead>

<tr>

<th>Prompt Domain</th>

<th>Objective</th>

<th>Example Prompt</th>

</tr>

</thead>

<tbody>

<tr>

<td>Education & Policy</td>

<td>Capture variation in institutional or access-related framing</td>

<td>“Describe how classroom technology is evolving where you are, including local policies, access issues, or digital habits.”</td>

</tr>

<tr>

<td>Labor & Economy</td>

<td>Observe how employment and job-seeking are described regionally</td>

<td>“How do people usually find jobs or new work opportunities where you are?”</td>

</tr>

<tr>

<td>Scientific Reasoning</td>

<td>Measure factual consistency and expository clarity</td>

<td>“Write a short paragraph explaining how photosynthesis works in plants.”</td>

</tr>

</tbody>

</table>

Each prompt was delivered through authenticated residential network tunnels in five regions:

- United States

- Switzerland

- New Zealand

- Taiwan

- Germany

To do this, we used a single pool of Massive's ethically sourced residential exit nodes, ensuring geographically authentic routing without biasing the model's behaviour.

Every request therefore originated from a legitimate local endpoint, while all other conditions (prompt, model, parameters, and timing) were held the same.

2.2 Controlled Conditions and Fairness

Fairness and reproducibility were central to the experiment. All API calls used the same OpenAI model (gpt-3.5-turbo) with identical inference parameters. Before generation, each proxy was checked with an HTTPS probe and an IP-identity lookup to confirm that it exited in the target country.

Unstable or failed tunnels were kept in the dataset with explicit failure flags to maintain transparency.

<table class="GeneratedTable">

<thead>

<tr>

<th>Parameter</th>

<th>Value</th>

<th>Purpose / Rationale</th>

</tr>

</thead>

<tbody>

<tr>

<td>Model</td>

<td>gpt-3.5-turbo</td>

<td>Maintain architectural consistency</td>

</tr>

<tr>

<td>Temperature</td>

<td>0.4</td>

<td>Reduce stochastic variance</td>

</tr>

<tr>

<td>Max tokens</td>

<td>350</td>

<td>Normalize verbosity</td>

</tr>

<tr>

<td>Delay between calls</td>

<td>1 second</td>

<td>Prevent rate bias, ensure fairness</td>

</tr>

<tr>

<td>Retries per call</td>

<td>3</td>

<td>Handle transient network failures</td>

</tr>

<tr>

<td>Proxy verification</td>

<td>HTTPS + IP lookup</td>

<td>Confirm authentic regional routing</td>

</tr>

</tbody>

</table>

These constraints were designed so that any variation between responses could be attributed to regional access rather than to sampling randomness or to differences in model state across locales.
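The pipeline in 2.3 records the exit country through `probe_proxy_identity` and passes it into the system context, but it does not itself compare that country with the target. A minimal sketch of such a comparison follows. The names `EXPECTED_CODES` and `verify_exit_country` are hypothetical, and the input is assumed to be the parsed ip-api.com JSON the pipeline already requests (it reads the `query` and `countryCode` fields).

```python
# Hypothetical mapping from the study's region labels to ISO 3166-1 alpha-2 codes,
# mirroring the "-country-XX" suffixes used in the proxy usernames.
EXPECTED_CODES = {
    "United States": "US",
    "Switzerland": "CH",
    "New Zealand": "NZ",
    "Taiwan": "TW",
    "Germany": "DE",
}


def verify_exit_country(region: str, ip_lookup: dict) -> tuple[bool, str]:
    """Compare the country reported by an IP lookup with the region's target code.

    `ip_lookup` is assumed to be a parsed ip-api.com JSON response, which carries
    the exit IP in "query" and the country in "countryCode".
    Returns (ok, reason) so that failures can be flagged rather than dropped.
    """
    expected = EXPECTED_CODES.get(region)
    if expected is None:
        return False, f"no target code configured for {region!r}"
    observed = ip_lookup.get("countryCode")
    if not observed:
        return False, "lookup returned no country code"
    if observed.upper() != expected:
        return False, f"expected {expected}, got {observed}"
    return True, "ok"


# Usage with illustrative lookup payloads (documentation IP ranges, not real measurements)
print(verify_exit_country("Germany", {"query": "203.0.113.7", "countryCode": "DE"}))
print(verify_exit_country("Taiwan", {"query": "198.51.100.2", "countryCode": "HK"}))
```

Returning a reason string instead of raising keeps with the study's practice of retaining failed tunnels with explicit flags.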

2.3 The Complete Experimental Pipeline

The pipeline was implemented as a single Python script that performs proxy probing, identity verification, controlled API calls, and compilation of the results into the Regional AI Matrix CSV.

```python
import time

import pandas as pd
import requests
from tqdm.auto import tqdm

# Configuration
OPENAI_API_KEY = "REPLACE_WITH_YOUR_KEY"  # <-- paste your key
OPENAI_URL = "https://api.openai.com/v1/chat/completions"
MODEL = "gpt-3.5-turbo"

PROXY_USER = "your_proxy_username"
PROXY_PASS = "your_proxy_password"
PROXY_HOST = "network.joinmassive.com"
CANDIDATE_PORTS = [65534, 65535]
CANDIDATE_SCHEMES = ["http", "https"]

# Columns (country names) -> proxy username with a country-targeting suffix
COUNTRY_PROXIES = {
    "United States": f"{PROXY_USER}-country-US",
    "Switzerland": f"{PROXY_USER}-country-CH",
    "New Zealand": f"{PROXY_USER}-country-NZ",
    "Taiwan": f"{PROXY_USER}-country-TW",
    "Germany": f"{PROXY_USER}-country-DE",
}

# Prompts (rows)
PROMPTS = [
    "Describe how classroom technology is evolving where you are, including local policies, access issues, or digital habits.",
    "How do people usually find jobs or new work opportunities where you are?",
    "Write a short paragraph explaining how photosynthesis works in plants.",
]

HEADERS = {
    "Authorization": f"Bearer {OPENAI_API_KEY}",
    "Content-Type": "application/json",
    "Connection": "close",
}
TIMEOUT = 60
DELAY = 1.0  # polite pacing between calls (seconds)


def _build_proxies(username: str, scheme: str, port: int) -> dict:
    """Build a requests-style proxies mapping for one scheme/port combination."""
    url = f"{scheme}://{username}:{PROXY_PASS}@{PROXY_HOST}:{port}"
    return {"http": url, "https": url}


def _https_probe_ok(proxies: dict) -> bool:
    """Confirm the HTTPS tunnel works (some POPs reject CONNECT on certain ports/schemes)."""
    try:
        r = requests.get("https://cloudflare.com/cdn-cgi/trace", proxies=proxies, timeout=15)
        return r.ok
    except Exception:
        return False


def _select_working_proxy(username: str):
    """Try scheme x port; return the first proxies that pass the HTTPS probe, plus a label."""
    for port in CANDIDATE_PORTS:
        for scheme in CANDIDATE_SCHEMES:
            proxies = _build_proxies(username, scheme, port)
            if _https_probe_ok(proxies):
                return proxies, f"{scheme}://{PROXY_HOST}:{port}"
    return None, None


def probe_proxy_identity(proxies: dict):
    """Return (exit_ip, country_code) as seen through the proxy; 'unknown' if the probe fails."""
    ip, cc = "unknown", "unknown"
    try:
        r = requests.get("http://ip-api.com/json", proxies=proxies, timeout=15)
        if r.ok:
            j = r.json()
            ip = j.get("query") or "unknown"
            cc = j.get("countryCode") or "unknown"
    except Exception:
        pass
    return ip, cc


def call_openai(prompt: str, proxies: dict, context_tag: str) -> str:
    # Note: the system message embeds context_tag (exit IP and country code),
    # so it is the one part of the request payload that differs between regions.
    payload = {
        "model": MODEL,
        "messages": [
            {"role": "system",
             "content": f"System context: {context_tag}.\nRespond clearly and factually."},
            {"role": "user", "content": prompt},
        ],
        "temperature": 0.4,
        "top_p": 1.0,
        "max_tokens": 350,
    }
    # Simple retry with exponential backoff for transient 5xx / tunnel flakiness
    backoff = 1.0
    for attempt in range(3):
        try:
            r = requests.post(OPENAI_URL, headers=HEADERS, json=payload,
                              proxies=proxies, timeout=TIMEOUT)
            if r.status_code == 200:
                return r.json()["choices"][0]["message"]["content"].strip()
            if 500 <= r.status_code < 600:
                time.sleep(backoff)
                backoff *= 2
                continue
            return f"[Error {r.status_code}] {r.text[:200]}"
        except requests.exceptions.RequestException as e:
            if attempt < 2:
                time.sleep(backoff)
                backoff *= 2
                continue
            return f"[Exception: {e}]"
    return "[Error] Exhausted retries"


# Run and build the matrix
responses = {prompt: {country: "" for country in COUNTRY_PROXIES} for prompt in PROMPTS}

for country, username in tqdm(COUNTRY_PROXIES.items(), desc="Countries"):
    proxies, label = _select_working_proxy(username)
    if proxies is None:
        # No working tunnel variant; fill the cells with a clear failure flag
        for prompt in PROMPTS:
            responses[prompt][country] = "[Proxy tunnel failed: no working scheme/port]"
        continue

    ip, cc = probe_proxy_identity(proxies)
    context = f"session={ip}, net={cc}"
    for prompt in tqdm(PROMPTS, desc=f"{country}", leave=False):
        responses[prompt][country] = call_openai(prompt, proxies, context)
        time.sleep(DELAY)

df = pd.DataFrame.from_dict(responses, orient="index", columns=list(COUNTRY_PROXIES.keys()))

print("\nRegional AI Response Matrix:")
try:
    display(df)  # available in Jupyter/IPython
except NameError:
    print(df.to_string())

# Save matrix only
df.to_csv("regional_ai_matrix.csv", encoding="utf-8", index_label="Prompt")
print("\nMatrix saved to 'regional_ai_matrix.csv'")
```

The pipeline systematically validates each regional connection, collects the model outputs, and stores them in a reproducible CSV dataset, which formed the empirical foundation for the analysis that follows.

Note: Results may vary slightly on each run due to live model and proxy response fluctuations.

2.4 Quantifying Regional Variation

With the Regional AI Matrix assembled, the next step was to translate the resulting text into quantifiable measures of regional difference.

To keep the analysis balanced and interpretable, the study used a three-layer structure that measures variation in surface linguistic form, in underlying semantic content, and in a combined divergence score.

<table class="GeneratedTable">

<thead>

<tr>

<th>Metric Category</th>

<th>Quantified Aspect</th>

<th>Computation Method</th>

<th>Interpretive Meaning</th>

</tr>

</thead>

<tbody>

<tr>

<td>Lexical Difference</td>

<td>Vocabulary and phrasing diversity</td>

<td>Jaccard distance and TF-IDF cosine distance between regional pairs</td>

<td>Reveals how word choice and phrasing vary between regions</td>

</tr>

<tr>

<td>Semantic Variation</td>

<td>Conceptual and contextual divergence</td>

<td>Sentence Mover’s Distance (SMD) computed from OpenAI embeddings</td>

<td>Measures shifts in underlying meaning or conceptual framing</td>

</tr>

<tr>

<td>Composite Divergence (RCDI)</td>

<td>Unified measure of regional differentiation</td>

<td>Weighted fusion of semantic divergence and length variance</td>

<td>Captures the overall magnitude of regional model differentiation</td>

</tr>

</tbody>

</table>

Lexical Quantification

The lexical level analyses token-level similarity between responses from different regions.

The analysis isolates purely lexical differences by computing two measures: Jaccard distance (one minus the overlap between the two responses' word sets) and TF-IDF cosine distance (one minus the cosine similarity of their term-weighted vectors).

This gives an objective measure of how strongly the same prompt elicits different lexical patterns in different countries.
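A minimal, dependency-free sketch of both lexical measures follows. The tokenizer (lowercased alphanumeric runs) is an assumption, since the study does not state its preprocessing; the IDF uses the smoothed form ln((1 + n) / (1 + df)) + 1, which is also scikit-learn's `TfidfVectorizer` default, though a library implementation would differ in tokenization details.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase and split into alphanumeric tokens (an assumed, simple tokenizer)."""
    return re.findall(r"[a-z0-9]+", text.lower())


def jaccard_distance(a: str, b: str) -> float:
    """1 - |A ∩ B| / |A ∪ B| over the token sets of two responses."""
    sa, sb = set(tokenize(a)), set(tokenize(b))
    union = sa | sb
    if not union:
        return 0.0  # two empty responses are treated as identical
    return 1.0 - len(sa & sb) / len(union)


def tfidf_vectors(docs: list[str]) -> list[dict[str, float]]:
    """Sparse TF-IDF vectors with smoothed IDF: ln((1 + n) / (1 + df)) + 1."""
    tokenized = [tokenize(d) for d in docs]
    n = len(docs)
    df = Counter(term for toks in tokenized for term in set(toks))
    idf = {t: math.log((1 + n) / (1 + c)) + 1 for t, c in df.items()}
    return [{t: tf * idf[t] for t, tf in Counter(toks).items()} for toks in tokenized]


def cosine_distance(u: dict[str, float], v: dict[str, float]) -> float:
    """1 - cosine similarity of two sparse vectors (1.0 if either is empty)."""
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    nu = math.sqrt(sum(w * w for w in u.values()))
    nv = math.sqrt(sum(w * w for w in v.values()))
    if nu == 0 or nv == 0:
        return 1.0
    return 1.0 - dot / (nu * nv)


# Usage with two short illustrative responses (not study data)
resp_1 = "Most people find jobs through online job boards and referrals."
resp_2 = "Many people find work through job boards, agencies and referrals."
vec_1, vec_2 = tfidf_vectors([resp_1, resp_2])
print(round(jaccard_distance(resp_1, resp_2), 3), round(cosine_distance(vec_1, vec_2), 3))
```

In practice the IDF would more sensibly be fitted over all five regional responses to a prompt rather than over a single pair.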

Semantic Quantification

Sentence Mover's Distance (SMD) is a transport-based comparison that measures similarity at the level of meaning rather than individual words.

With each sentence encoded by an OpenAI embedding model, the resulting distance indicates how much conceptual "work" is needed to move the meaning of one region's output onto another's. These values are then normalised across all region pairs for comparability.
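Exact SMD requires solving an optimal-transport problem between the two sets of sentence embeddings. The sketch below swaps in a simpler relaxed variant: each sentence's mass (assumed uniform, 1/number of sentences) moves entirely to its nearest counterpart under cosine distance, and the larger of the two one-sided costs is kept. This is a lower bound on the exact transport distance, not the study's implementation; the toy 3-dimensional vectors stand in for real embeddings, and the normalisation step is not shown.

```python
import math

Vector = list[float]


def cosine_dist(u: Vector, v: Vector) -> float:
    """1 - cosine similarity between two dense embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / norm if norm else 1.0


def relaxed_mover_cost(src: list[Vector], dst: list[Vector]) -> float:
    """Move each source sentence's uniform mass to its nearest target sentence."""
    return sum(min(cosine_dist(s, t) for t in dst) for s in src) / len(src)


def relaxed_smd(a: list[Vector], b: list[Vector]) -> float:
    """Relaxed Sentence Mover's Distance: the larger of the two one-sided costs.

    Each one-sided cost drops one set of transport constraints, so the result
    lower-bounds the exact optimal-transport distance. Both inputs need at
    least one sentence embedding.
    """
    return max(relaxed_mover_cost(a, b), relaxed_mover_cost(b, a))


# Usage with toy 3-d "embeddings" standing in for real sentence embeddings
resp_a = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
resp_b = [[1.0, 0.1, 0.0], [0.0, 0.0, 1.0], [0.0, 1.0, 0.0]]
print(round(relaxed_smd(resp_a, resp_b), 3))
```

Taking the maximum of both directions makes the measure symmetric, which matches how the pairwise region maps are read.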

Composite Quantification (RCDI)

To combine semantic and surface-level views, the Regional Content Divergence Index (RCDI) merges both into a single, intuitive metric.

It is defined in terms of the following quantities:

- 𝑁 is the number of regions,

- (𝑖,𝑗) are unique region pairs,

- semantic_similarity denotes cosine similarity between embedding vectors, and

- 𝜆 (0.1–0.2) controls the contribution of length-based variation.

The RCDI is thus a composite measure of content divergence across linguistic and semantic space; higher values indicate stronger differentiation between regions.
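Because the formula itself is not given in the text, the sketch below assumes one form consistent with the definitions above: the mean of (1 − semantic_similarity) over all N(N − 1)/2 unique region pairs, plus 𝜆 times a length-variation term. Using the coefficient of variation of response lengths (population standard deviation divided by the mean) as that term is our assumption, as is the default 𝜆 = 0.15, the midpoint of the stated range. The function name `rcdi` is hypothetical.

```python
import math
from itertools import combinations
from statistics import mean, pstdev

Vector = list[float]


def cosine_similarity(u: Vector, v: Vector) -> float:
    """Cosine similarity between two embedding vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def rcdi(embeddings: dict[str, Vector], lengths: dict[str, int],
         lam: float = 0.15) -> dict[str, float]:
    """Assumed RCDI for one prompt: mean pairwise (1 - cosine) + lam * length variation.

    embeddings: region -> response embedding; lengths: region -> response length
    (e.g. word count). The length term is the coefficient of variation, an assumption.
    """
    regions = sorted(embeddings)
    if len(regions) < 2:
        raise ValueError("need at least two regions")
    pairs = list(combinations(regions, 2))  # the N(N-1)/2 unique (i, j) pairs
    semantic_mean = mean(1.0 - cosine_similarity(embeddings[i], embeddings[j])
                         for i, j in pairs)
    counts = [lengths[r] for r in regions]
    avg = mean(counts)
    length_var = pstdev(counts) / avg if avg else 0.0
    return {
        "semantic_mean": semantic_mean,
        "length_variation": length_var,
        "rcdi": semantic_mean + lam * length_var,
    }


# Usage with toy 2-d embeddings and word counts for placeholder regions (not study data)
emb = {"A": [1.0, 0.0], "B": [0.8, 0.6], "C": [0.6, 0.8]}
words = {"A": 120, "B": 100, "C": 140}
print(rcdi(emb, words))
```

Returning the two components alongside the index mirrors the stacked presentation of semantic mean and length variance used for the per-prompt results.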

2.5 Verification of the Regional AI Matrix Dataset

Once the regional generations were complete, the responses were organized into a pandas DataFrame and exported as regional_ai_matrix.csv.

Each row corresponds to one of the standardized prompts, and each column to one regional endpoint.

This matrix serves as the basis for all subsequent lexical, semantic, and RCDI analyses.

Under controlled conditions, the verified dataset documents successful routing, uniform model settings, and variation in wording by region of origin.

It thus offers an open and replicable basis for the cross-regional comparisons presented in the next section.
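A verification pass over the matrix can be sketched as follows. It scans the `responses` mapping the pipeline builds (prompt → country → text) for the failure strings that the pipeline writes into cells (`[Proxy tunnel failed…`, `[Error…`, `[Exception:…`) and for empty cells. The helper name `find_flagged_cells` is hypothetical.

```python
FAILURE_PREFIXES = ("[Proxy tunnel failed", "[Error", "[Exception:")


def find_flagged_cells(responses: dict) -> list[tuple[str, str, str]]:
    """Return (prompt, country, flag) for each cell that is empty or holds a failure flag.

    `responses` maps prompt -> country -> text, the same shape the pipeline builds.
    Non-string cells (e.g. NaN after reading an empty CSV cell) count as empty.
    """
    flagged = []
    for prompt, row in responses.items():
        for country, text in row.items():
            if not isinstance(text, str) or not text.strip():
                flagged.append((prompt, country, "empty"))
            elif text.startswith(FAILURE_PREFIXES):
                # Keep only the bracketed flag, e.g. "[Error 429]"
                flagged.append((prompt, country, text.split("]", 1)[0] + "]"))
    return flagged


# Usage with a small illustrative matrix (not study data)
sample = {
    "prompt 1": {"United States": "People often use job boards.",
                 "Taiwan": "[Proxy tunnel failed: no working scheme/port]"},
    "prompt 2": {"United States": "[Error 429] rate limited", "Taiwan": ""},
}
for cell in find_flagged_cells(sample):
    print(cell)
```

To run it on the saved file, `pd.read_csv("regional_ai_matrix.csv", index_col="Prompt").to_dict(orient="index")` produces the same prompt → country → text shape.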

3. Interpreting Regional Patterns in AI-Generated Content

The results are presented in four complementary visualizations. Together, they offer a layered picture of how text generated by large language models is influenced when the same questions are posed through geographically specific nodes. Each visualization takes a particular analytical scope (lexical, semantic, or composite), and together they outline how the same prompts take on subtly different linguistic and conceptual hues worldwide.

3.1 Lexical Patterns across Regions

Figure 3.1 — Lexical Differences Across Prompts (Jaccard and TF-IDF Heatmaps)


Figure 3.2 — Average Lexical Differences per Prompt (Across Regions)


At the most visible level, the choice of words, phrases, and idioms shifts with national routing.

The heatmaps in Figure 3.1 show pairwise linguistic distance among the five regions for each prompt, using two complementary measures: word-set overlap (Jaccard distance) and TF-IDF vector-space distance.

A few broad patterns emerge.

- Prompts that invite local reference, such as those on education or job-seeking practices, produce stronger color contrasts, implying that phrasing is more sharply differentiated between localities.

- Prompts grounded in broadly shared scientific knowledge show smaller distances, suggesting that factual and technical text constrains regional differences.

- Finally, every grid is symmetric, as both measures are: the differences are reciprocal rather than directional. No region exerts lexical dominance over another, but each displays its own set of favored terms.

Figure 3.2 aggregates these grids into a bar chart of average lexical distance per prompt.

The first two prompts produce conspicuously taller bars, reflecting greater regional variation in phrasing. The shorter bar for the last prompt reflects convergence in word choice around globally standardized content.
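The aggregation from grid to bar can be sketched as follows, assuming each bar is the mean of the unique off-diagonal cells of that prompt's grid (the averaging rule is our assumption). Any pairwise distance, such as Jaccard or TF-IDF cosine distance, can be plugged in; a simple whitespace-token Jaccard stands in here, and the function names are hypothetical.

```python
from itertools import combinations
from typing import Callable

Grid = dict[tuple[str, str], float]


def word_jaccard(a: str, b: str) -> float:
    """Stand-in distance: Jaccard distance over whitespace-separated words."""
    sa, sb = set(a.split()), set(b.split())
    union = sa | sb
    return 1.0 - len(sa & sb) / len(union) if union else 0.0


def pairwise_grid(texts: dict[str, str], dist: Callable[[str, str], float]) -> Grid:
    """Symmetric region x region distance grid for one prompt (zero diagonal)."""
    grid: Grid = {(r, r): 0.0 for r in texts}
    for a, b in combinations(texts, 2):
        grid[(a, b)] = grid[(b, a)] = dist(texts[a], texts[b])
    return grid


def mean_pairwise(grid: Grid) -> float:
    """Average over the unique off-diagonal cells, i.e. one value per region pair."""
    regions = sorted({a for a, _ in grid})
    values = [grid[(a, b)] for a, b in combinations(regions, 2)]
    return sum(values) / len(values)


# Usage with toy responses for three placeholder regions (not study data)
texts = {"A": "a b c", "B": "a b d", "C": "x y z"}
grid = pairwise_grid(texts, word_jaccard)
print(round(mean_pairwise(grid), 3))
```

Averaging only the upper triangle avoids counting each pair twice and keeps the zero diagonal from pulling the mean down.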

The lexical results indicate that regionality is expressed most strongly in prompts related to policy, culture, or lived experience, and remains muted in disciplinary or fact-based topics.

3.2 Semantic Landscape of Regional Responses

Figure 3.3 — Regional Semantic Maps via Sentence Mover’s Distance

Figure 3.3 charts the conceptual space of each region's responses to the three prompts. Nodes represent countries, and proximity on the map reflects semantic similarity as measured by Sentence Mover's Distance. Lines link nearest neighbors in meaning rather than in word usage.
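The nearest-neighbor links can be sketched as follows, assuming the (normalised) SMD values for one prompt are stored as a symmetric mapping keyed by region pairs. The function name is hypothetical, and the layout step that positions nodes on the map is not shown.

```python
def nearest_neighbor_edges(dist: dict[tuple[str, str], float]) -> set[frozenset[str]]:
    """Link every region to its closest other region and return undirected edges.

    `dist` must hold both (i, j) and (j, i) keys for every pair of regions.
    Ties are broken alphabetically so the result is deterministic.
    """
    regions = sorted({a for a, _ in dist} | {b for _, b in dist})
    edges = set()
    for r in regions:
        others = [o for o in regions if o != r]
        nearest = min(others, key=lambda o: (dist[(r, o)], o))
        edges.add(frozenset((r, nearest)))
    return edges


# Usage with made-up distances for placeholder regions A-D (not study results)
upper = {("A", "B"): 0.10, ("A", "C"): 0.30, ("A", "D"): 0.45,
         ("B", "C"): 0.28, ("B", "D"): 0.40, ("C", "D"): 0.35}
sym = {**upper, **{(b, a): d for (a, b), d in upper.items()}}
for edge in sorted(sorted(e) for e in nearest_neighbor_edges(sym)):
    print(" - ".join(edge))
```

Storing edges as frozensets merges mutual nearest neighbors (such as A and B here) into a single line, so chains and small clusters appear naturally.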

Here, patterns of association and dissociation become more apparent. Tight clusters of nodes indicate regions that express the same concepts in similar structures, whereas outliers indicate semantic drift toward different emphases or priorities.

For example, English-speaking regions tend to sit close together, forming small triangles or chains, while other routes (for example, East Asian ones) take more distant positions, likely owing to differences in idiomatic framing, institutional terminology, or audience orientation. Yet even within dense clusters, the maps shift slightly, suggesting finer-grained differences in emphasis: one region may emphasize access or equity, another technology or pedagogy.

Rather than depicting competition, the network-like mesh depicts a diversity of framing, reinforcing the central point that semantic groupings can persist irrespective of surface lexical similarity. These maps therefore offer an interpretable view of how the model's meaning space is shaped by regionally filtered data.

3.3 Composite Divergence and Overall Index Behavior

Figure 3.4 — Semantic Mean, Length Variance, and Regional Content Divergence Index (per Prompt)


The final visualization condenses the multi-layer analysis into a single interpretable measure, the Regional Content Divergence Index (RCDI), shown alongside its two components: mean semantic divergence and variance in response length.

In Figure 3.4, each prompt's overall divergence score is shown as a stacked bar whose segments indicate how much each component contributes. Prompts that invoke local context produce taller bars, indicating greater cross-regional variation; technical explanations produce shorter bars, indicating more stable discursive patterns.

The proportions of the segments remain relatively constant: regardless of topic, semantic divergence contributes more than variation in verbosity. This suggests that regionality lies in the conceptual territory the model occupies rather than in the volume of text it produces.

Across all prompts, the RCDI stays within a narrow range, neither collapsing to uniformity nor spiking toward randomness. This suggests that regional tuning operates within modest bounds: a small degree of adaptation can express local colour without sacrificing coherence, whereas a larger degree might not keep that coherence intact.

3.4 Integrated Interpretation

Taken together, the three analytical tiers reveal a coherent hierarchy of variation:

- Lexical changes reflect surface adjustment to local idiomatic and institutional language.

- Semantic structures indicate greater or lesser agreement in how topics are conceptualized.

- Composite indices provide a quantitative measure of the balance between shared meanings and those unique to particular regions.

In aggregate, geographic routing does not merely randomize output. Instead, it systematically re-prioritizes emphasis and context of expression, producing variation that is clearly audible yet within ordinary bounds. More precisely, there is no single 'model voice' here but a chorus of localized performances, each situated in its own canvas of regional data traces, yet conversant within a common underlying model architecture.

4. Decoding Regional Diversity Within a Unified AI Framework

The visual patterns in the empirical results above tell a larger story about how globally deployed language models negotiate regional identity. When the model's responses are filtered through national endpoints, they are not merely adjusted toward more conforming word usage; they reorganize the information field subtly and systematically. Understanding these changes is essential for understanding what "global AI" is in practice: less a single universal voice than a set of local adaptations constrained by architecture and access.

4.1 A Layered View of Regional Expression

Taken together, the lexical, semantic, and composite measures form a graded continuum of variation.

Lexical changes reflect how models adopt the linguistic norms of the texts they were trained or tuned on: for example, differences in spelling, terminology, and institutional references that reflect local conventions of communication. Semantic configurations, in turn, show differences in the emphasis and framing applied to the same facts.

Across these layers, models appear to capture not only linguistic practice but also a cultural sense of what is considered relevant, credible, or polite within the local information landscape. For example, whereas European responses to the education prompt lean toward policy-oriented framing, responses to the same prompt routed through Asia or Oceania more often foreground technological equity. Such distinctions are not random noise but systematic paths of inference shaped by region-specific data exposure and reinforcement.

4.2 Interpreting Variation as Model Geography

Rather than being viewed as mistakes or bias, these divergences can be read as signs of an emerging model geography: an informational cartography of how algorithmically mediated information circulates around the globe.

In this geography, regions serve as interpretive coordinates: each query crosses not only a network route but also a latent epistemic landscape shaped by linguistic density, censorship, media regulation, and institutional visibility.

For this reason, dialectization can be treated as a diagnostic signal.

- When responses diverge in contexts that should be homogeneous (e.g., scientific explanations), this points to a lack of model calibration.

- When divergence widens precisely where a local perspective is useful (e.g., employment culture, digital policy), the model demonstrates its sensitivity to different cultural frames.

Measuring both outcomes together opens an inquiry into contextual intelligence: how far can a global model adapt to particular contexts before it loses its epistemic footing?

4.3 Governance and the Question of Transparency

From a governance perspective, such regional distinctions complicate many conventional notions of neutrality. AI systems are often portrayed as 'global', but these results illustrate that consistency and localisation are in tension with one another.

Because jurisdictions differ in their training data distributions, alignment signals, and content filters, users in different regions do not receive equivalent communicative affordances. This is not necessarily a malfunction, but it does call for clear documentation of how and where region-specific post-processing layers come into play, whether through reinforcement learning, API routing, or compliance filters.

Such visibility matters to policymakers seeking fair and accessible auditing. For researchers, it offers an easily replicated method for tracking changes in the semantic cohesion of model outputs over time and space.

The proxy-based design of the experiment shows that these questions can be approached empirically, without access to the internal model, by observing linguistic and conceptual differences in results.

4.4 Toward a Framework for Measurable Localization

The Regional Content Divergence Index (RCDI) is proposed as one such measure.

Although simple, it captures both semantic similarity and stylistic variation, which together make up regional differentiation. Refinements incorporating more granular readability metrics, topic variance, or temporal drift could turn it into a dynamic monitoring framework for AI localization. More broadly, such indices could underpin transparent governance dashboards through which public institutions, journalists, or educators compare how global models represent the same question across societies.
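The RCDI formula is not restated in this section, so the following is only an illustrative sketch of how an index combining semantic similarity and stylistic variation might be computed. Assumptions: bag-of-words cosine similarity stands in for the semantic component (a real study would more likely use sentence embeddings), type-token ratio stands in for style, and the two are mixed with a hypothetical `semantic_weight`. The `rcdi` function and its input format (one response per region to the same prompt) are hypothetical.

```python
import math
import re
from collections import Counter
from itertools import combinations


def tokenize(text: str) -> list[str]:
    # Lowercased word tokens; a deliberately crude tokenizer for illustration.
    return re.findall(r"[a-z']+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two bag-of-words vectors, clamped to at most 1.
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return min(1.0, dot / norm) if norm else 0.0


def type_token_ratio(tokens: list[str]) -> float:
    # Share of distinct words: a rough proxy for stylistic variety.
    return len(set(tokens)) / len(tokens) if tokens else 0.0


def rcdi(responses: dict[str, str], semantic_weight: float = 0.5) -> float:
    """Hypothetical RCDI: weighted mean of pairwise semantic and stylistic distance.

    `responses` maps a region label to the model's answer to one shared prompt.
    Returns a value in [0, 1]; 0 means no measurable divergence.
    """
    tokens = {region: tokenize(text) for region, text in responses.items()}
    bags = {region: Counter(toks) for region, toks in tokens.items()}
    pairs = list(combinations(tokens, 2))
    if not pairs:
        return 0.0  # fewer than two regions: nothing to compare
    semantic = sum(1.0 - cosine(bags[a], bags[b]) for a, b in pairs) / len(pairs)
    stylistic = sum(
        abs(type_token_ratio(tokens[a]) - type_token_ratio(tokens[b])) for a, b in pairs
    ) / len(pairs)
    return semantic_weight * semantic + (1.0 - semantic_weight) * stylistic
```

A score of 0 means the regional responses share the same word distribution and type-token ratio; values closer to 1 indicate stronger divergence. Averaging over region pairs keeps the index comparable when the number of regions varies.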

In doing so, they move the discussion from anecdotal impressions ("the model sounds Western/neutral/biased") to empirically traceable evidence.

4.5 Broader Significance

More broadly, we argue that global models are not neutral intermediaries but contextualized narrators.

They reflect the informational ecosystems in which they were trained and through which they are queried. This is both a strength and a vulnerability: it can produce local resonance, but it can also entrench pre-existing asymmetries of representation. Recognizing this tension reorients the aim of "alignment" away from imposing a single moral or cultural norm and toward building mechanisms that make such differences visible, measurable, and accountable.

Only then can global AI systems act as tools of communication rather than passive carriers of digital geographies.

Conclusion: Quantifying the Shape of Regional Intelligence

The worldwide use of large language models tends to conceal the subtle adjustments they make to place. This paper makes such adaptations visible and measurable by systematically sending the same prompts through geographically distributed proxies. The findings indicate that regional variability in AI-generated text is not random noise but reflects systematic patterning by linguistic, cultural, and informational context.

Using a controlled experimental setup, the analysis examined variation through three complementary lenses. Lexical differences capture the surface of local expression: which words, phrases, or institutional names prevail in local speech. Semantic mapping reveals the deeper conceptual convergence and divergence that shape meaning beyond individual words. Finally, the Regional Content Divergence Index (RCDI) synthesizes these textual changes into a single, measurable indicator of contextual divergence.
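The lexical lens can be illustrated by ranking the words one region's output uses more often than the others. This sketch uses an add-alpha smoothed log ratio of relative frequencies, a common and simple choice that the study does not necessarily use; the `distinctive_terms` function, its parameters, and the example responses are hypothetical.

```python
import math
import re
from collections import Counter


def distinctive_terms(
    responses: dict[str, str], region: str, top_k: int = 5, alpha: float = 1.0
) -> list[tuple[str, float]]:
    """Rank terms over-represented in one region's output relative to all other regions.

    Score = log of smoothed relative frequency in the target region minus
    log of smoothed relative frequency in the pooled remaining regions.
    """
    counts = {r: Counter(re.findall(r"[a-z']+", t.lower())) for r, t in responses.items()}
    target = counts[region]
    rest = Counter()
    for r, c in counts.items():
        if r != region:
            rest.update(c)  # pool every other region into one reference corpus
    vocab = set(target) | set(rest)
    # Add-alpha smoothing keeps words unseen on one side from producing log(0).
    n_target = sum(target.values()) + alpha * len(vocab)
    n_rest = sum(rest.values()) + alpha * len(vocab)
    scores = {
        w: math.log((target[w] + alpha) / n_target) - math.log((rest[w] + alpha) / n_rest)
        for w in vocab
    }
    # Highest score first; ties broken alphabetically for deterministic output.
    return sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))[:top_k]
```

For example, with `{"uk": "The NHS covers the cost", "us": "The insurer covers the cost"}`, the top term for `"uk"` is `"nhs"` and the top term for `"us"` is `"insurer"`, while shared words such as `"the"` score zero.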

Together, these approaches provide an empirical basis for studying what might be called the geography of model behavior. The results show that global models maintain overall coherence, yet their outputs take on the interpretive contours of the regions in which they are accessed. This is not a sign of dysfunction but of funneling: the model's probabilistic language is funneled by local norms and by the data signals most readily available in each region.

These measurement frameworks open new opportunities for AI transparency and accountability. They enable researchers, policymakers, and developers to assess cross-border consistency and contextual responsiveness without access to proprietary systems. As AI use grows worldwide, such diagnostic tools will be essential for ensuring that localization does not slide into fragmentation.

Although these differences can seem arbitrary, they offer a map not only of the internet's informational diversity but also of the dynamic architecture of collective intelligence: a feedback ecology in which the local and the global continually shape one another.
