Introduction: Mapping Consumer Voices Across Borders

In the global village of digital commerce, a single product can carry thousands of distinct reputations around the world. Online shopping platforms like Walmart, Amazon, and Flipkart mirror the ways in which people from different cultures value things and put those values into words. Even when the product is identical (as with the Galaxy S25 Ultra, a flagship device sold all over the world), the tone, vocabulary, and emotion of its reviews differ vastly from one country to another. Each marketplace, while describing the same hardware, exposes a distinct narrative of cultural identity, aspiration, and satisfaction.

Global branding is meant to homogenize perception, but a brand's sentiment remains famously local. A five-star rating may reward technical efficiency in one part of the world and signal social status or affordability in another. Economic conditions, linguistic culture, and consumer predispositions interact to define not just the quality of the product experience but the way that experience is put into words. These culture-dependent norms and biases are subtle, but they matter to the business people, linguists, and researchers who want to interpret digital speech in culturally appropriate ways.

This research establishes a multi-country "review lens", that is, a structured way of comparing human-written reviews of the same product across borders. Using verified data from the marketplaces of Canada, the United States, and Mexico, the analysis investigates how geography affects the language of consumer feedback. Based on patterns of sentiment, wording, and emphasis, the study aims to understand how the Galaxy S25 Ultra, a global technological device, is translated into local, everyday usage.

At its heart, this research poses the following question: How does geography affect the tone, word choice, and even polarity of online consumer expression?

Building on previously published work on AI response analysis across regional clusters, this project shifts the focus to human language in the wild: the spontaneous voices of actual buyers, who describe their experiences not as high-resolution data points but as lived realities embedded in location, value systems, and language.

1. Why National Markets Speak Differently


1.1 Cultural Economics of Feedback

Consumer reviews are, to some extent, public cultural performances as much as they are evaluations of a product. Each opinion is not just a solitary complaint written by a disgruntled consumer; it is also part of the social and economic ecosystem that shapes the product's market and its availability to individuals. Three drivers are especially influential:

- Price sensitivity and financial risk: In areas where high-end technology is a big financial investment, reviews tend to express gratitude or caution. A Canadian buyer might extol "value for money," whereas a Mexican buyer could call the purchase "worth the wait"; both use an economic context to evaluate the purchase favorably.

- Trust in retail institutions: In countries with strong consumer protection, reviewers tend to be more assertive in their opinions ("The Galaxy S25 Ultra performs perfectly"). In areas where post-sale reliability is more variable, the tone becomes more tentative or narrative ("it is good as of now, fingers crossed it is here for long").

- Cultural norms for communication: Some cultures favor directness, precision, and factual comments such as "Excellent camera, fast processor." Others prefer indirectness, delivering kinder and gentler criticism through hedges such as "Could be improved" or "I expected a little more." These linguistic preferences reflect a general tendency of the speaker: assertive vs. deferential, individual vs. collective expression.

Thus, an individual product review becomes a miniature of the national discourse, shaped at the intersection of economy, cultural pragmatics, and expectations of what honesty should sound like.

1.2 The Digital Platform as a Mirror of Local Expectations

Global retail ecosystems such as Walmart operate under a single brand but multiple realities. Each country-specific website (U.S., Canada, Mexico) has its own UX design, language register, price presentation, and moderation and AI-tool policies, adapted to local conditions.

- Localized interface: Elements such as currency, installation options, and delivery promises affect pre-review expectations.

- Regional moderation and language policies: Each platform strikes a different balance between openness and civility, which determines what kind of feedback passes moderation.

- Contextual pricing: Even when the phone is listed at $1,299 in U.S. dollars, a significantly different emotional response is triggered when the same phone appears with a local price tag that differs by 300 or 400 CAD, or by the equivalent in another local currency.

Reviews written under these conditions mirror not just the product but the localized expectations surrounding it.

The trends found in previous analysis include:

- United States: Efficiency-driven, short, and somewhat hasty remarks such as "Great phone, ultra-fast," or "Battery could last longer."

- Canada: Measured and balanced: "I like that they delivered quickly, very satisfied overall."

- Mexico: Emotionally expressive and story-linked reviews: "My husband loved it, finally upgraded after years!"

Together, these tonal differences constitute what one can call "national sentiment fingerprints": recurring linguistic textures that mark each society's understanding of what counts as value and satisfaction in commerce.

1.3 Global Product, Local Meaning

The Samsung Galaxy S25 Ultra is a perfect case study of how global products acquire local meaning. Technically it is the same device everywhere, but it represents something different in each country:

- United States: A status technology that signals progress, performance, and lifestyle.

- Mexico: The dream, bought with pride, a story of success and modernity.

- Canada: Strength in reliability, respected for practicality and cost-performance ratio.

These shifts in meaning highlight the different cultural values that buyers assign to the same specifications, whether AI camera, display brightness, or battery life:

- In some markets, technological superiority is admired as innovation.

- In others, the discourse is led by affordability, longevity, or added social value.

Thus, linguistic indicators in reviews are not random noise but organized expressions of cultural values.

This leads to the central hypothesis:

- Cultural frames in user reviews: The rhetorical strategy of reviews shifts not with the product's innovative properties but with the value framing of the culture.

The analysis that follows tests this hypothesis empirically, examining how geographic and linguistic diversity turn one phone's reputation into multiple social narratives.

2. Building the Multi-Country Review Lens


A consistent and transparent experimental framework was required in order to uncover how real buyers from different countries describe the same product. Therefore, the "multi-country review lens" was developed as a controlled global pipeline, which can collect, clean, and harmonize review text from different geographical regions in an ethical and technically rigorous manner.

2.1 Data Sources and Target Product

The study used three geographically different but structurally similar retailers (Walmart Canada, Walmart USA, and Walmart Mexico), each containing verified buyer reviews of the same product (Samsung Galaxy S25 Ultra).

Each regional site had essentially the same HTML layout, with country-specific localization layers added on top, such as currency, delivery methods, and language encoding. Reviews were spread over hundreds of paginated pages, which demanded both reliable network access and purpose-built parsing to maintain the desired coverage.

<table class="GeneratedTable">

<thead>

<tr>

<th>Country</th>

<th>Platform Endpoint</th>

<th>Approx. Pages</th>

<th>Language</th>

</tr>

</thead>

<tbody>

<tr>

<td>Canada</td>

<td><a href="https://www.walmart.ca/en/reviews/product/5MTTQST5PA9U">https://www.walmart.ca/en/reviews/product/5MTTQST5PA9U</a></td>

<td>~421</td>

<td>English</td>

</tr>

<tr>

<td>USA</td>

<td><a href="https://www.walmart.com/reviews/product/14916573729">https://www.walmart.com/reviews/product/14916573729</a></td>

<td>~1000</td>

<td>English</td>

</tr>

<tr>

<td>Mexico</td>

<td><a href="https://www.walmart.com.mx/opiniones/productos/00880609706379">https://www.walmart.com.mx/opiniones/productos/00880609706379</a></td>

<td>~332</td>

<td>Spanish</td>

</tr>

</tbody>

</table>

Each page embedded a JSON array (customerReviews or reviews) in the body of the HTML. Information obtained from these fields was then labeled, normalized, and unified in a multinational, multilingual framework that constitutes the empirical data of the present study.

2.2 Network Infrastructure and Ethical Routing

To make local access authentic, the pipeline used Massive’s residential proxies, which route traffic through real devices in the targeted country.

This ensured that the data retrieved was what a local shopper would see, not a cross-border, cached, or filtered view.

The key principles of the routing layer are as follows:

- Authenticity: Each request was geographically located in the appropriate marketplace country.

- Compliance: Data collection was done on publicly visible review data, excluding personal identifiers.

- Ethical safeguard: Output data was anonymized, keeping only the country, review, and rating columns.

This approach respected the platform's visibility boundaries and adhered to research ethics, giving a regionally legitimate way to access the data.

2.3 Harvesting Architecture

The collection layer is a modular Python pipeline that acts as a local shopper in each market, crawling through the review pages in order with deterministic page counts. It combines lightweight plain-HTTP fetching (for speed and portability) with fallbacks that keep the data retrievable when a CDN interferes (for robustness).

In short, the harvester works as follows:

- Localized transport: Per-country residential proxy paths (Canada, USA, and Mexico) with a conservative browser-like header profile attached.

- Resilient fetching: Limits retries, backs off for longer after each failed attempt, and lets certain non-200 responses (e.g., 403/451/456) pass through so that the parsers can still mine any inline state.

- Dual HTML+JSON parsing: Searches for review payloads in three locations:

  - JSON-LD blocks,

  - key entities like customerReviews / reviews, and

  - preloaded state blobs (e.g., __WML_REDUX_INITIAL_STATE__).

- Normalization: Collapses all sources to the minimal schema country | review | rating with typed ratings (1–5).

- Pagination discipline: Iterates pages in order up to empirically measured upper limits (CA = 421, US = 1000, MX = 332), stops once the per-site review target is reached, and waits a randomized inter-page delay (0.4-0.9 s) for politeness.

- De-duplication: Items are filtered by a (review_prefix, rating) key, and empties are removed.

- Translation pass: Mexico reviews are translated to English (in batches) so that the language analysis runs on a consistent language while the intended sense is preserved.

- Deterministic output: Generates one CSV and an in-memory DataFrame to be used in subsequent sentiment and lexical analytics.

import re, json, time, html, random, typing as T, requests, pandas as pd
from bs4 import BeautifulSoup
from tqdm.auto import tqdm
from urllib.parse import urlparse, urlunparse, urlencode, parse_qs

# Configuration
HEADERS = {
    "User-Agent": ("Mozilla/5.0 (Windows NT 10.0; Win64; x64) "
                   "AppleWebKit/537.36 (KHTML, like Gecko) "
                   "Chrome/119.0.0.0 Safari/537.36"),
    "Accept-Language": "en-US,en;q=0.9",
    "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,*/*;q=0.8",
    "Connection": "close",
    "Referer": "https://www.google.com/",
}
TIMEOUT = 35     # seconds per request
RETRIES = 2      # extra attempts after the first request
BACKOFF = 1.25   # base sleep (seconds), multiplied by the attempt number

# Replace the placeholders below with your real Massive proxy credentials and host.
MASSIVE_USER = "your_proxy_userid"
MASSIVE_PASS = "your_proxy_password"
HOSTPORT = "network.joinmassive.com:65534"

# If you want to disable proxies temporarily, set USE_PROXIES = False
USE_PROXIES = True

# Build PROXIES dict programmatically using placeholders
if USE_PROXIES:
    PROXIES = {
        "CA": f"http://{MASSIVE_USER}-country-CA:{MASSIVE_PASS}@{HOSTPORT}",
        "US": f"http://{MASSIVE_USER}-country-US:{MASSIVE_PASS}@{HOSTPORT}",
        "MX": f"http://{MASSIVE_USER}-country-MX:{MASSIVE_PASS}@{HOSTPORT}",
    }
else:
    PROXIES = {}

SITES = [
    {"country": "Canada", "cc": "CA",
     "url": "https://www.walmart.ca/en/reviews/product/5MTTQST5PA9U?sort=submission-desc",
     "source": "Walmart Canada"},
    {"country": "USA", "cc": "US",
     "url": "https://www.walmart.com/reviews/product/14916573729?sort=submission-desc",
     "source": "Walmart USA"},
    {"country": "Mexico", "cc": "MX",
     "url": "https://www.walmart.com.mx/opiniones/productos/00880609706379?sort=submission-desc",
     "source": "Walmart Mexico"},
]

# Targets & limits
TARGET_MIN_REVIEWS_PER_SITE = 200
MAX_PAGES_PER_SITE = 30           # kept for fallback if total unknown
DELAY_BETWEEN_PAGES = (0.4, 0.9)  # seconds (min, max)

# Known page counts (upper bounds for sequential crawl)
PAGES_TOTAL = {"CA": 421, "US": 1000, "MX": 332}

# HTTP Helpers
def get_proxies(cc: str) -> T.Optional[dict]:
    """Return the requests-style proxy mapping for a country code, or None."""
    if not USE_PROXIES:
        return None
    p = PROXIES.get(cc)
    if not p:
        return None
    return {"http": p, "https": p}

def robust_get_text(url: str, cc: str, max_retries: int = RETRIES) -> T.Optional[str]:
    """Fetch a page through the country proxy, retrying with a growing delay."""
    last_err = None
    for attempt in range(1, max_retries + 2):
        try:
            prox = get_proxies(cc)
            r = requests.get(url, headers=HEADERS, timeout=TIMEOUT, proxies=prox)
            if r.ok:
                return r.text
            # Some blocked/error pages still embed inline state, so hand them to the parsers
            if r.status_code in (403, 404, 451, 456):
                return r.text
            last_err = f"HTTP {r.status_code}"
        except Exception as e:
            last_err = repr(e)
        # Linear back-off plus a little jitter
        time.sleep(BACKOFF * attempt + random.random()*0.6)
    tqdm.write(f"[WARN] Failed fetching {url} ({last_err})")
    return None

# JSON Utilities
def norm_text(x: T.Any) -> str:
    if x is None:
        return ""
    return x.strip() if isinstance(x, str) else str(x).strip()

def safe_get(d: T.Any, *path, default=None):
    """Walk nested dicts/lists along path; return default on any miss."""
    cur = d
    try:
        for p in path:
            if isinstance(cur, list) and isinstance(p, int):
                cur = cur[p]
            elif isinstance(cur, dict):
                cur = cur.get(p)
            else:
                return default
        return cur if cur is not None else default
    except Exception:
        return default

def extract_json_ld(soup: BeautifulSoup) -> T.List[dict]:
    """Parse every <script type="application/ld+json"> block on the page."""
    out = []
    for tag in soup.find_all("script", {"type": "application/ld+json"}):
        txt = (tag.string or tag.get_text() or "").strip()
        if not txt:
            continue
        try:
            data = json.loads(txt)
            out.extend(data if isinstance(data, list) else [data])
        except Exception:
            pass
    return out

def greedy_find_json_arrays(html_text: str, keys=("customerReviews","reviews","review")) -> T.List[dict]:
    """Find '"key": [ ... ]' arrays in raw HTML by bracket balancing."""
    results = []
    for key in keys:
        for m in re.finditer(rf'"{key}"\s*:\s*\[', html_text):
            start = m.end() - 1  # '['
            depth, end = 0, start
            while end < len(html_text):
                ch = html_text[end]
                if ch == '[':
                    depth += 1
                elif ch == ']':
                    depth -= 1
                    if depth == 0:
                        arr_str = html_text[start:end+1]
                        try:
                            arr = json.loads(arr_str)
                            results.append({"key": key, "data": arr})
                        except Exception:
                            # Retry after decoding HTML entities
                            try:
                                arr = json.loads(html.unescape(arr_str))
                                results.append({"key": key, "data": arr})
                            except Exception:
                                pass
                        break
                end += 1
    return results

def extract_assigned_json_objects(html_text: str) -> T.List[dict]:
    """Parse preloaded state objects assigned to well-known globals."""
    patterns = [
        r'window\.__WML_REDUX_INITIAL_STATE__\s*=\s*(\{.*?\});',
        r'window\.__PRELOADED_STATE__\s*=\s*(\{.*?\});',
        r'__NEXT_DATA__\s*=\s*(\{.*?\});',
        r'window\.__INITIAL_STATE__\s*=\s*(\{.*?\});',
        r'window\.__APP_INITIAL_STATE__\s*=\s*(\{.*?\});',
    ]
    out = []
    for pat in patterns:
        for m in re.finditer(pat, html_text, flags=re.DOTALL):
            blob = m.group(1).strip()
            try:
                out.append(json.loads(blob))
            except Exception:
                try:
                    out.append(json.loads(html.unescape(blob)))
                except Exception:
                    # naive brace-balance
                    try:
                        i = blob.find('{')
                        if i >= 0:
                            depth, j = 0, i
                            while j < len(blob):
                                if blob[j] == '{':
                                    depth += 1
                                elif blob[j] == '}':
                                    depth -= 1
                                    if depth == 0:
                                        out.append(json.loads(blob[i:j+1]))
                                        break
                                j += 1
                    except Exception:
                        pass
    return out

# Review Normalization (only country, review, rating)
def normalize_review_item_min(item: dict, country: str) -> T.Optional[dict]:
    # Try the field names the different payload shapes use for the review text
    text = norm_text(
        safe_get(item, "reviewText") or
        safe_get(item, "review") or
        safe_get(item, "text") or
        safe_get(item, "reviewBody") or
        safe_get(item, "body") or
        safe_get(item, "description")
    )
    if not text:
        return None
    rating = (
        safe_get(item, "rating") or
        safe_get(item, "ratingValue") or
        safe_get(item, "reviewRating", "ratingValue")
    )
    # Coerce the rating to an integer star value; leave None when it cannot be parsed
    try:
        if rating is not None and str(rating).strip() != "":
            rating = int(round(float(rating)))
        else:
            rating = None
    except Exception:
        rating = None
    return {"country": country, "review": text, "rating": rating}

# Inline Parser
def parse_walmart_inline_reviews_min(html_text: str, *, country: str) -> T.List[dict]:
    soup = BeautifulSoup(html_text, "html.parser")
    out: list[dict] = []

    # 1) JSON-LD review arrays
    for obj in extract_json_ld(soup):
        if not isinstance(obj, dict):
            continue  # skip JSON-LD entries that are not objects
        revs = obj.get("review")
        if isinstance(revs, list):
            for item in revs:
                norm = normalize_review_item_min(item, country)
                if norm:
                    out.append(norm)
    if out:
        return out

    # 2) Named arrays
    for blk in greedy_find_json_arrays(html_text, keys=("customerReviews", "reviews")):
        arr = blk["data"]
        if isinstance(arr, list):
            for item in arr:
                norm = normalize_review_item_min(item, country)
                if norm:
                    out.append(norm)
    if out:
        return out

    # 3) Preloaded state blobs (breadth-first walk of the nested state)
    for obj in extract_assigned_json_objects(html_text):
        queue = [obj]
        while queue:
            cur = queue.pop(0)
            if isinstance(cur, list):
                for v in cur:
                    if isinstance(v, (dict, list)):
                        queue.append(v)
            elif isinstance(cur, dict):
                for k, v in cur.items():
                    if isinstance(v, list) and k in {"customerReviews", "reviews"}:
                        for item in v:
                            norm = normalize_review_item_min(item, country)
                            if norm:
                                out.append(norm)
                    if isinstance(v, (dict, list)):
                        queue.append(v)
    return out

# Pagination & Harvester
def with_page(url: str, page: int) -> str:
    """Return url with page=n (preserve existing query)."""
    pr = urlparse(url)
    q = parse_qs(pr.query)
    q["page"] = [str(page)]
    new_q = urlencode({k: (v[0] if isinstance(v, list) else v) for k, v in q.items()}, doseq=True)
    return urlunparse(pr._replace(query=new_q))

def harvest_site(site: dict) -> list[dict]:
    country = site["country"]
    cc = site["cc"]
    base_url = site["url"]
    rows: list[dict] = []
    seen = set()

    # Sequential page order starting from page 1 (no random sampling)
    total_pages = PAGES_TOTAL.get(cc, MAX_PAGES_PER_SITE)
    page_pool = list(range(1, total_pages + 1))

    # tqdm progress bar per country
    pbar = tqdm(page_pool, desc=f"{country}", leave=True)
    for page in pbar:
        page_url = with_page(base_url, page)
        html_text = robust_get_text(page_url, cc)
        if not html_text:
            tqdm.write(f"[WARN] No HTML for {country} page {page}")
            continue
        chunk = parse_walmart_inline_reviews_min(html_text, country=country)
        added = 0
        for r in chunk:
            # De-duplicate on the first 120 characters of the text plus the rating
            key = (r.get("review","")[:120], r.get("rating"))
            if key in seen:
                continue
            seen.add(key)
            rows.append(r)
            added += 1
        # update bar postfix instead of printing
        pbar.set_postfix_str(f"page={page} +{added} total={len(rows)}")
        # Stop early once the per-site target is reached
        if len(rows) >= TARGET_MIN_REVIEWS_PER_SITE:
            break
        time.sleep(random.uniform(*DELAY_BETWEEN_PAGES))
    pbar.close()
    return rows

def harvest_walmart_ca_us_mx():

frames = []

for site in tqdm(SITES, desc="Walmart Pagination Scrape"):

rows = harvest_site(site)

df_site = pd.DataFrame(rows, columns=["country","review","rating"])

frames.append(df_site)

df = pd.concat(frames, ignore_index=True) if frames else pd.DataFrame(columns=["country","review","rating"])

# --- translate Mexico reviews to English (overwrite 'review' for Mexico only)

try:

from googletrans import Translator

import inspect, asyncio

translator = Translator()

mask = (df["country"] == "Mexico") & df["review"].notna() & (df["review"].astype(str).str.strip() != "")

if mask.any():

tqdm.write("Translating Mexico reviews to English...")

idx = df.index[mask]

texts = df.loc[idx, "review"].astype(str).tolist()

translations = []

BATCH = 50

pbar_t = tqdm(total=len(texts), desc="Mexico Translation", leave=True)

for i in range(0, len(texts), BATCH):

batch = texts[i:i+BATCH]

res = translator.translate(batch, src='auto', dest='en')

# handle async googletrans variants

if inspect.isawaitable(res):

try:

import nest_asyncio

nest_asyncio.apply()

loop = asyncio.get_event_loop()

res = loop.run_until_complete(res)

except Exception:

res = asyncio.run(res)

if not isinstance(res, list):

res = [res]

translations.extend([getattr(r, "text", str(r)) for r in res])

pbar_t.update(len(batch))

time.sleep(0.6 + random.random()*0.4) # gentle throttle

pbar_t.close()

df.loc[idx, "review"] = pd.Series(translations[:len(idx)], index=idx)

except Exception as e:

tqdm.write(f"[WARN] Translation step skipped/failed: {e}")

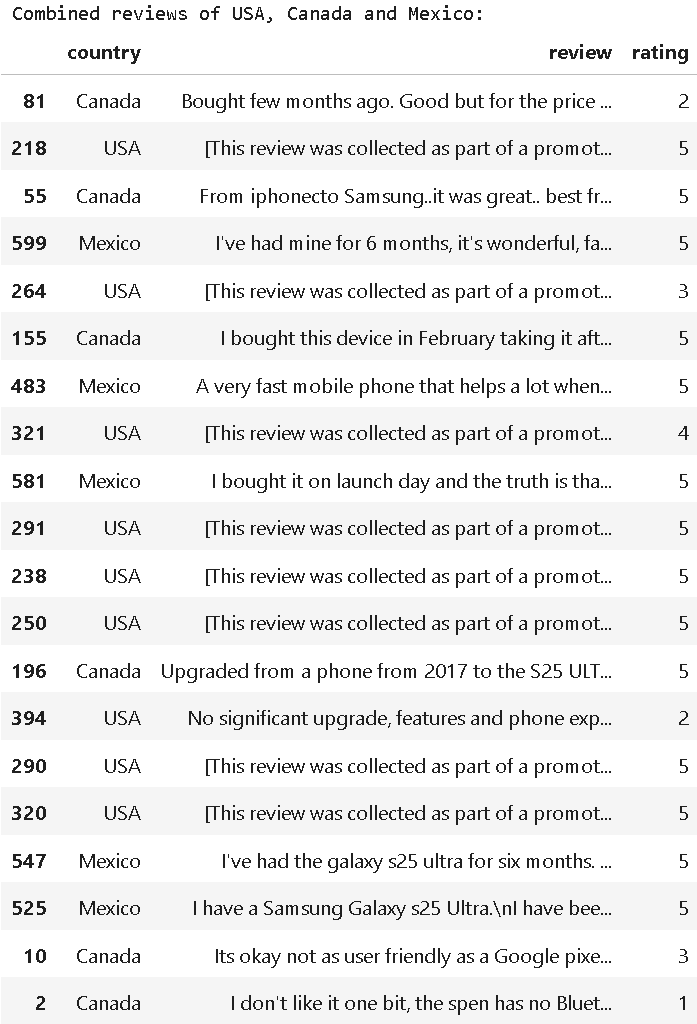

print("\nCombined reviews of USA, Canada and Mexico:")

# show random 20 rows of resultant df

display(df.sample(n=min(20, len(df)), random_state=42))

print(f"Total rows: {len(df)}")

# Save combined CSV only (Mexico reviews are now English)

df.to_csv("walmart_reviews_ca_us_mx.csv", index=False)

print("Saved -> walmart_reviews_ca_us_mx.csv")

return df

# Run

df_reviews = harvest_walmart_ca_us_mx()

This combined pipeline automatically collected between 600 and 650 reviews from the three countries, which later served as the foundation for the comparative linguistic analysis.

2.4 Quantifying Collected Reviews

After constructing the cross-language review dataset (country, review, rating), the second step of the analysis transformed the unstructured review data into meaningful, comparable signals. Sentiment Distribution Analysis, Lexical Frequency Visualization, and Index-Based Metrics were used to convert numerical scores and linguistic expression into cross-country consumer insights.

Sentiment Distribution Analysis (SDA)

The first level of quantification assigned a sentiment polarity to each review according to its star rating.

- Ratings of 4-5 were defined as Positive, 3 as Neutral, and 1-2 as Negative.

- This classification gave the proportion of satisfied, neutral, and dissatisfied users in each country.

The sentiment distribution made possible a structured comparison of how optimism, neutrality, and dissatisfaction manifest across markets. For example, some markets showed a stronger positivity bias, while others showed higher variance, which implies different cultural thresholds for "satisfaction."

This underlying segmentation turned the numerical data into emotionally interpretable signals, which served as the basic framework for the higher-level textual and quantitative analysis.
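The rating-to-sentiment rule described in this subsection can be sketched in a few lines of pandas. This is a minimal illustration, not the study's own code: the function names `rating_to_sentiment` and `sentiment_distribution` are hypothetical, and the sample rows are made up purely to show the shape of the output.

```python
import pandas as pd

def rating_to_sentiment(rating):
    """Map a 1-5 star rating to a label; rows without a rating stay unlabeled."""
    if pd.isna(rating):
        return None
    if rating >= 4:
        return "Positive"
    if rating == 3:
        return "Neutral"
    return "Negative"

def sentiment_distribution(df):
    """Return the percentage share of each sentiment label per country."""
    labeled = df.assign(sentiment=df["rating"].map(rating_to_sentiment))
    labeled = labeled.dropna(subset=["sentiment"])
    shares = (labeled.groupby("country")["sentiment"]
              .value_counts(normalize=True)
              .unstack(fill_value=0.0) * 100)
    return shares.round(1)

# Made-up sample rows, for illustration only (not data from the study)
sample = pd.DataFrame({
    "country": ["USA", "USA", "Canada", "Canada", "Mexico", "Mexico"],
    "review": ["a", "b", "c", "d", "e", "f"],
    "rating": [5, 1, 4, 3, 5, 4],
})
dist = sentiment_distribution(sample)
print(dist)
```

Applied to the real dataset, the same call would take the `df_reviews` DataFrame (or the saved CSV) in place of `sample`.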

Lexical Frequency Visualization

Beyond ratings, the text of reviews shows how users frame their experiences.

To capture these linguistic tendencies, the text corpus for each country was tokenized and divided into positive and negative sub-corpora. Frequency counts were taken on each sub-corpus, and high-frequency words were identified to determine common product-related terms.

This frequency mapping added qualitative depth by uncovering the main narrative elements that consumers returned to again and again. For example:

- Mexico used expressive words such as camera, photo, and excellent, which indicated enthusiasm about the phone's imaging capabilities.

- USA reviews ranged from galaxy, ultra, and love to negative words such as trash, failure, and sound.

- Canada used a dual mode of writing, with functional references such as pen and battery alongside marketing expressions such as collected parts.

By analyzing word frequency in each market, language itself became another measure, revealing the role of culture and communication style in the perception of products.
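The tokenize-split-count procedure described above might look roughly like the following sketch. Several details are assumptions rather than the study's actual choices: the regex tokenizer, the tiny `STOPWORDS` set, the rule that 4-5 stars form the positive sub-corpus and 1-2 stars the negative one (3-star reviews are skipped), and the helper name `top_terms_by_polarity`. The sample rows are invented.

```python
import re
from collections import Counter, defaultdict

# Small illustrative stop-word list (an assumption; a real run would use a fuller one)
STOPWORDS = {"the", "a", "an", "and", "is", "it", "this", "to", "of",
             "i", "my", "but", "so", "for", "in", "was", "very"}

def tokenize(text):
    """Lowercase the text and keep alphabetic tokens that are not stop words."""
    return [t for t in re.findall(r"[a-z']+", text.lower()) if t not in STOPWORDS]

def top_terms_by_polarity(rows, n=5):
    """Count tokens per (country, polarity) and return the n most frequent of each."""
    counters = defaultdict(Counter)
    for country, review, rating in rows:
        if rating is None or rating == 3:
            continue  # neutral or unrated reviews belong to neither sub-corpus
        polarity = "positive" if rating >= 4 else "negative"
        counters[(country, polarity)].update(tokenize(review))
    return {key: counter.most_common(n) for key, counter in counters.items()}

# Made-up sample rows, for illustration only (not data from the study)
rows = [
    ("Mexico", "Excellent camera, excellent photos", 5),
    ("Mexico", "The camera is excellent", 4),
    ("USA", "Trash sound, total failure", 1),
]
top = top_terms_by_polarity(rows)
print(top[("Mexico", "positive")])
```

The resulting per-country frequency lists are the kind of input a word-cloud or bar-chart library would consume for the visualizations.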

Index-Based Metrics

To condense the numeric and linguistic indicators into comparable quantitative values, three indices were developed for each market:

- Satisfaction Index (SI): Percentage of 4-5 star reviews, measuring overall positive feedback.

- Positivity Index (PI): Average rating normalized to a 0-100% scale, summarizing the positive or negative emotional tone of the feedback.

- Engagement Index (EI): The market's relative review participation rate, describing the intensity of engagement.

Together, these indices formed a relative metric system describing how satisfied, how positive, and how participatory each country's consumer base was. They provided a consistent numerical gauge through which sentiment and involvement across a wide-ranging region could be compared.
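As a rough sketch, the three indices as defined in this subsection could be computed as below. The exact formulas are not given in the text, so two of them are assumptions: PI is taken as the mean rating rescaled from the 1-5 range to 0-100%, and EI as each country's review count relative to the average count across countries (so 100% means average participation). The function name `compute_indices` is hypothetical and the sample data is made up.

```python
import pandas as pd

def compute_indices(df):
    """Return SI, PI and EI (all in percent) per country."""
    ratings = df.dropna(subset=["rating"]).groupby("country")["rating"]
    # SI: share of 4-5 star reviews
    si = ratings.apply(lambda r: (r >= 4).mean() * 100)
    # PI (assumed formula): mean rating rescaled from [1, 5] to [0, 100]
    pi = (ratings.mean() - 1) / 4 * 100
    # EI (assumed formula): review count relative to the cross-country average
    counts = ratings.size()
    ei = counts / counts.mean() * 100
    return pd.DataFrame({"SI": si, "PI": pi, "EI": ei}).round(1)

# Made-up sample rows, for illustration only (not data from the study)
sample = pd.DataFrame({
    "country": ["USA"] * 4 + ["Canada"] * 2 + ["Mexico"] * 2,
    "rating": [5, 5, 1, 4, 4, 3, 5, 4],
})
indices = compute_indices(sample)
print(indices)
```

Other normalizations (for example, dividing the mean rating by 5) would shift the PI values, so the formula in use should be stated alongside any reported figure.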

2.5 Consolidated Review Dataset

The multi-country scraping pipeline generated a clean and standardized dataset of customer reviews for the Samsung Galaxy S25 Ultra from Walmart Canada, the US, and Mexico. Each review entry had three harmonized fields (country, review, and rating), which allows direct cross-regional comparison.

All records were first normalized, de-duplicated, and translated (Mexican reviews into English). Boilerplate promotional phrases were removed in order to retain only genuine user feedback. The process resulted in more than 600 quality reviews covering all three countries.

The combined dataset was saved as walmart_reviews_ca_us_mx.csv, which was used for the subsequent sentiment and linguistic analyses in the study.

The integrated output, over all the regions, is plotted as an example below.

3. Analyzing Cross-Country Sentiment


This section presents the interpretive layer of the Multi-Country Review Lens, in which the harvested textual corpus is processed into comparative emotional, linguistic, and behavioral markers. Through sentiment classification, lexical visualization, and quantitative index modeling, the analysis reveals how consumer expression varies across borders in voice and texture, in light of specific cultural and economic conditions.

Sentiment Distribution Analysis

The first level of interpretation categorizes each review as Positive, Neutral, or Negative depending on the polarity of the text content and the corresponding numeric rating.

The resulting distribution (below) shows clear differences between national audiences:

- The USA has the highest positivity rate, dominated by 5-star reviews expressing enthusiasm and satisfaction with the product.

- Mexico shows mostly balanced sentiment, combining appreciation of performance with sensitivity to pricing and durability.

- Canada has a somewhat more critical tone and often focuses on technical features and nuances of user experience.

[Figure: sentiment distribution by country]

These sentiment ratios show that even for a well-established worldwide product such as the Samsung Galaxy S25 Ultra, perceived value still depends on cultural context: affordability, accessibility, communicative tone, and the national technology market.

Lexical Frequency Visualization

Beyond the numeric polarity distribution, a frequency map of the vocabulary shows in detail how sentiment is expressed. The following word clouds (by country) show the tokens most prevalent in positive and negative reviews and suggest how users put satisfaction or frustration into words.

Mexico

- Strongly positive reviews include the keywords camera, excellent, photo, and Samsung, which indicates credibility in imaging and design.

- Negative reviews include words such as remove, update, button, and screen, which point to value concerns and usability friction.

[Figure: word clouds of frequent terms in positive and negative reviews, Mexico]

USA

- Positive words center on galaxy, love, camera, and feature, conveying personal attachment and pleasure.

- Negative language brings trash, failure, sound, and hate into the more emotional reviews, reflecting the bluntness of American reviews.

[Figure: word clouds of frequent terms in positive and negative reviews, USA]

Canada

- Positive reviews feature words such as part, battery, ultra, and performance, which suggest reliability and endurance.

- Negative terms such as Bluetooth, disappointed, problem, and charging indicate frustration with connectivity and other practical concerns.

[Figure: word clouds of frequent terms in positive and negative reviews, Canada]

Collectively, these lexical portraits reflect distinct communication styles: the aspirational optimism of Mexico, the expressive polarity of the USA, and the pragmatic assessment of Canada.

Index-Based Metrics

To synthesize sentiment and engagement quantitatively, three indices were calculated for each country:

- Satisfaction Index (SI): average normalized rating, the net happiness score.

- Positivity Index (PI): percentage of positive textual reviews.

- Engagement Index (EI): frequency of opinion-bearing words, adjusted for review volume (a measure of the intensity of expression).

[Figure: index-based metrics (SI, PI, EI) by country]

Key Findings:

- Mexico has the highest Satisfaction (93.5%), indicating high acceptance of the product and a high level of aspirational enthusiasm.

- In Engagement, the USA ranks first (138.7%), indicating high participation in reviews and a stronger intensity of expressed opinion.

- Canada has balanced, moderate values, which indicate pragmatic assessment and restrained emotionality.

4. Interpreting Regional Voices

While the data reveals clear patterns of a quantitative sentiment analysis, a worldly perspective looking at cultural, economic, and behavioral aspects of the linguistic deviations becomes fascinating and provides a deeper understanding of what is going on. This section gives meaning to the role of geography in mediating the expression of consumers, not only what people will say about the Samsung Galaxy S25 Ultra, but why they will say it differently.

4.1 Language as a Socio-Economic Proxy

Language used in Consumer reviews can often be doubled down as a sense of economic situation, plus brand positioning.

Even in the absence of overt references to money, tone as well as vocabulary indicate varying relationships between product, price, and perceived prestige:

- Canada - Stability and Realistic Guarantee

Canadian reviewers rely more on measured, functional terms (“reliable,” “solid,” “works well”), which reflects the stability of the Canadian dollar towards the U.S. dollar and, also, perhaps an optimistic utility orientation that expensive products should dutifully function as advertised.

- USA - Performance Excitement Expressive Ownership

U.S. users show the greatest level of expression, celebrating brand identity and personal connection (“love,” “amazing,” “best phone ever”). The language is a reflection of both consumer self-confidence and the performance-oriented culture that connects technology with self-expression.

- Mexico - Cultural Value Sensitivity and Tone Dependent Selling Style

As if steady in free economic theory, Mexican reviews breathe hesitant, ambivalent economic strain - pleasure recorded belatedly with qualifying cautionary clauses (“worth it,” “expensive but powerful”). Linguistic optimism is the feeling of aspirational purchase behaviour in which the premium brands represent an upward mobility.

These linguistic cues are consistent with market maturity and affordability gradients across countries, making sentiment a nuanced socio-economic signal rather than a purely reflexive emotional response.

4.2 Cultural Politeness and Review Style

The way that dissatisfaction or praise is expressed is indicative of cultural norms of communication and politeness.

Adapting loosely from Hofstede's cultural dimensions:

- Canada (moderate individualism, high uncertainty avoidance): provides hedged and tempered feedback (“could be better,” “not too bad”), so criticism lands more softly than its literal content would suggest.

- USA (high individualism, low power distance): uses direct and exaggerated tone, both approving (“absolutely love it!”) and disapproving (“trash,” “hate this”).

- Mexico (collectivist, high-context): combines courtesy with warmth and expressiveness, maintaining harmony even when expressing dissatisfaction (“a bit disappointing but still beautiful”).

Review language thus works as a digital continuation of etiquette. These stylistic courtesies suggest that the wording of a review is tied to national norms, and to where each culture draws the line between honesty and politeness.
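The hedged and emphatic phrasings quoted in this subsection can be treated as simple lexical markers. The following is a minimal sketch, not the method used in this study: the marker lists, the function names (`count_markers`, `style_profile`), and the sample sentences are all assumptions made for illustration, and a real analysis would need a validated lexicon for each language.

```python
import re

# Hypothetical marker lists, seeded from the example phrases quoted in this
# section; a real study would need a validated lexicon per language.
HEDGES = ("could be better", "not too bad", "a bit", "but still")
INTENSIFIERS = ("absolutely", "love", "hate", "trash", "amazing", "best phone ever")


def count_markers(text, markers):
    """Count whole-phrase, case-insensitive occurrences of each marker."""
    lowered = text.lower()
    return sum(
        len(re.findall(r"\b" + re.escape(marker) + r"\b", lowered))
        for marker in markers
    )


def style_profile(reviews):
    """Average number of hedges and intensifiers per review."""
    n = max(len(reviews), 1)  # avoid division by zero on an empty list
    return {
        "hedges": sum(count_markers(r, HEDGES) for r in reviews) / n,
        "intensifiers": sum(count_markers(r, INTENSIFIERS) for r in reviews) / n,
    }


# Illustrative inputs only: invented sentences built from the quoted phrases,
# not reviews drawn from the dataset.
samples = {
    "hedged": ["Could be better, but not too bad overall."],
    "emphatic": ["Absolutely love it! Best phone ever."],
    "softened": ["A bit disappointing but still beautiful."],
}

for label, reviews in samples.items():
    print(label, style_profile(reviews))
```

Comparing the two averages per market gives a rough, transparent indicator of review style. It deliberately ignores negation, sarcasm, and translation effects, which a fuller analysis would have to handle.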

4.3 Platform Moderation and Regional Framing

Beyond culture, the platform itself helps shape national discourse. Walmart's country-specific moderation systems, star-rating calibrations, and promotional filters create distinct, garden-like ecosystems, each with its own linguistic character:

- U.S. moderation is stricter and tends to downrank repetitive or emotionally extreme content, resulting in polished, high-engagement reviews.

- Canadian moderation leans towards neutrality, favouring brief and considered reviews.

- Mexican listings, often translated locally and quickly, are looser in style, using more synonyms and containing slang or regional phrases.

This infrastructural asymmetry produces platform bias, subtly shaping how opinions are voiced and what comes to be known as “public opinion.” Review ecosystems, like markets themselves, are regionally tuned.

4.4 Comparing Human and Model Geography

This human inter-country analysis parallels the regional variation in AI language models discussed in the previous article. Just as large language models are prone to localised syntax and sentiment biases, human reviewers express their own cultural biases through their choice of words, their tone, and their style of assessment.

In this sense, the consumer acts as an organic model, one that integrates economic optimism, cultural courtesy, and linguistic rhythm.

Their reviews are not mere reactions to a device but living datasets of social geography: in a global marketplace, every sentence carries the fingerprint of where it was written.

Conclusion: Toward a Geography of Consumer Language

In this article, we show that consumer reviews of the Samsung Galaxy S25 Ultra vary systematically by region, not only in sentiment polarity but also in tone, lexical patterns, and emphasis.

American reviewers favour emotional expressiveness and performance-based enthusiasm, Canadian consumers are pragmatic and restrained, and Mexican consumers blend admiration with economic caution. Digital feedback is this specific because it reflects how people use language in different societies, the economic realities of their lives, and the expectations each audience has of products and services.

Apart from its descriptive function, such cross-regional sentiment analysis has predictive value. Variations in the satisfaction index and in the intensity of engagement can serve as an early indicator of how well a product is adapting to a market, showing how closely a global product matches local expectations and purchasing sensibilities. Where sentiment is negative, marketing or pricing may need to be recalibrated; where engagement is positive, brand resonance is strongest.
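To make the early-indicator idea concrete, here is a minimal sketch under stated assumptions: sentiment scores are taken to lie in [-1, 1], the threshold of 0.0 is arbitrary, and the function name `flag_regions`, the region labels, and the numbers are placeholders rather than results from this study.

```python
from statistics import mean


def flag_regions(scores_by_region, threshold=0.0):
    """Map each region to (mean sentiment, needs_review).

    needs_review is True when the mean falls below the threshold.
    Regions with no scores are skipped rather than guessed at.
    """
    report = {}
    for region, scores in scores_by_region.items():
        if not scores:
            continue
        average = mean(scores)
        report[region] = (average, average < threshold)
    return report


# Placeholder scores for illustration only; these are not measured values.
example_scores = {
    "region_a": [0.5, 0.25, 0.75],
    "region_b": [-0.5, 0.25, -0.5],
    "region_c": [],
}

for region, (average, needs_review) in flag_regions(example_scores).items():
    status = "review marketing or pricing" if needs_review else "no action"
    print(f"{region}: mean={average:+.2f} -> {status}")
```

A single mean is a blunt instrument. In practice one would likely track the trend over time and weight it by engagement volume before drawing any conclusion about a market.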

Methodologically, this project develops a proxy-based architecture for ethical global review mining that can compare public conversations across national markets without intrusive data collection. By combining automated sentiment models, lexical analysis, and cultural interpretation, it enables reproducible, transparent, and integrative large-scale study of regional voice.

At a higher level, these results suggest that the human geography of opinion parallels the geography of aligned AI. As multiple authors of this publication have documented, both machine models and human reviewers exhibit locally tuned linguistic behaviours that are influenced by culture, moderated by infrastructure, and shared through common digital platforms.

Understanding this convergence is critical to creating future systems, human or artificial, that are globally aware yet linguistically and culturally rooted in place.

I'm a software engineer with experience in designing, developing, and testing software for desktop and mobile platforms.
